Mistral 7B's Free Chatbot Online and zephyr-7b chatbot

Chat with Model Fine-tuning Mistral 7B Model

Chat with OpenOrca

What is Mistral 7B?

Mistral 7B is the first large language model released by Mistral AI, and it is free for everyone to use. Generative AI needs open models, and free open-source models help accelerate its development.

Mistral-7B-v0.1 is a small yet powerful model adaptable to many use cases. Mistral 7B outperforms Llama 2 13B on all benchmarks, has natural coding abilities, and supports an 8k sequence length. It’s released under the Apache 2.0 licence. Mistral AI made it easy to deploy on any cloud and, of course, on your gaming GPU.

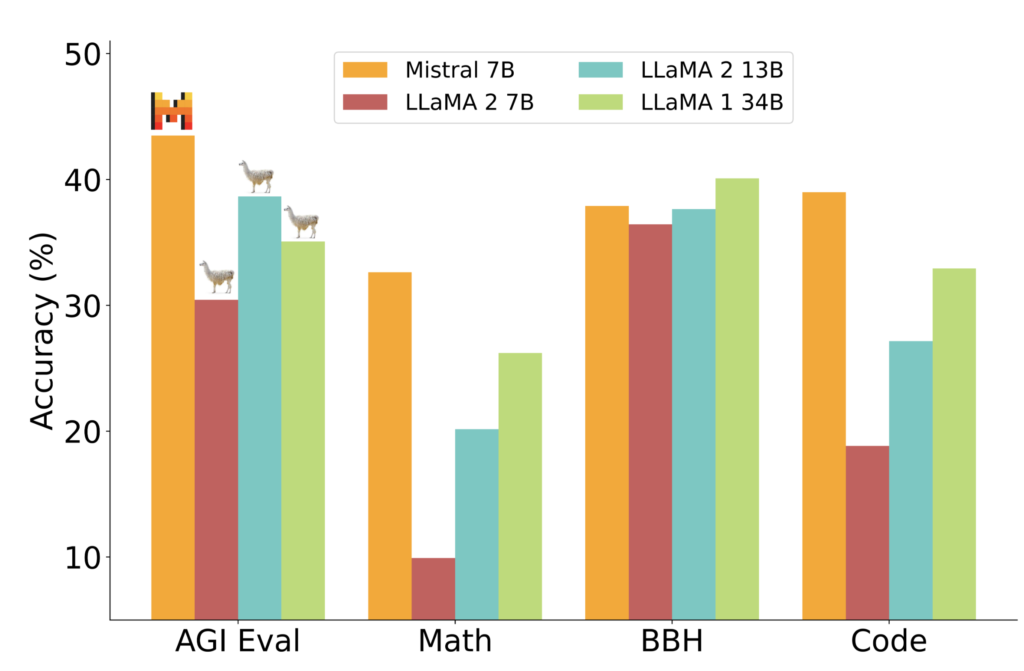

We compared Mistral 7B to the Llama 2 family and re-ran all model evaluations ourselves, using the same evaluation pipeline for every model so that the comparison is fair. Across a wide range of benchmarks, Mistral 7B significantly outperforms Llama 2 13B on all metrics and is on par with Llama 34B (since Llama 2 34B was not released, we report results on Llama 34B). It is also vastly superior in code and reasoning benchmarks.

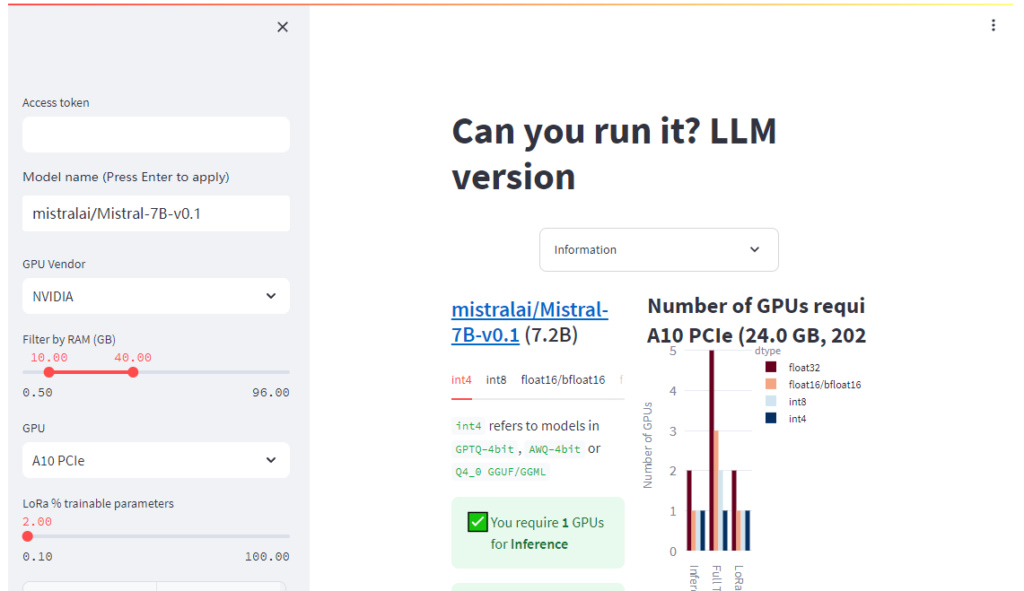

Can I run Mistral AI with my GPU?

Top Mistral 7B Fine-tune Models

OpenHermes-2-Mistral-7B

OpenHermes-2-Mistral-7B is a state of the art Mistral Fine-tune.

OpenHermes was trained on 900,000 entries of primarily GPT-4 generated data, from open datasets across the AI landscape.

These public datasets were extensively filtered, and all formats were converted to ShareGPT, which was then further transformed by axolotl to use ChatML. More information is available on Hugging Face.

zephyr-7b-alpha

Zephyr is a series of language models that are trained to act as helpful assistants. Zephyr-7B-α is the first model in the series, and is a fine-tuned version of mistralai/Mistral-7B-v0.1 that was trained on a mix of publicly available, synthetic datasets using Direct Preference Optimization (DPO). We found that removing the in-built alignment of these datasets boosted performance on MT Bench and made the model more helpful. However, this means that the model is likely to generate problematic text when prompted to do so and should only be used for educational and research purposes.

dolphin-2.1-mistral-7b

ehartford/dolphin-2.1-mistral-7b is an uncensored model. Its dataset has been filtered to remove alignment and bias, which makes the model more compliant. You are advised to implement your own alignment layer before exposing the model as a service. It will be highly compliant to any requests, even unethical ones. Please read my blog post about uncensored models. https://erichartford.com/uncensored-models You are responsible for any content you create using this model. Enjoy responsibly.

Mistral-7B-OpenOrca

Open-Orca/Mistral-7B-OpenOrca HF Leaderboard evals place this model as #1 for all models smaller than 30B at release time, outperforming all other 7B and 13B models! You can test this model here: Chat with OpenOrca

This release is trained on a curated filtered subset of most of our GPT-4 augmented data. It is the same subset of our data as was used in our OpenOrcaxOpenChat-Preview2-13B model.

Mistral-Trismegistus-7B

Transcendence is All You Need! Mistral Trismegistus is a model made for people interested in the esoteric, occult, and spiritual.

Special Features:

- The First Powerful Occult Expert Model: ~10,000 high quality, deep, rich, instructions on the occult, esoteric, and spiritual.

- Fast: Trained on Mistral, a state of the art 7B parameter model, you can run this model FAST on even a cpu.

- Not a positivity-nazi: This model was trained on all forms of esoteric tasks and knowledge, and is not burdened by the flowery nature of many other models, who chose positivity over creativity.

Click here to find more: Mistral-Trismegistus-7B

SciPhi-Mistral-7B-32k

The SciPhi-Mistral-7B-32k is a Large Language Model (LLM) fine-tuned from Mistral-7B-v0.1. This model underwent a fine-tuning process over four epochs using more than 1 billion tokens, which include regular instruction tuning data and synthetic textbooks. The objective of this work was to increase the model’s scientific reasoning and educational abilities.

Arithmo-Mistral-7B

Arithmo-Mistral-7B is trained to reason about and answer mathematical problems, and it can also write a Python program that prints the answer to the question when executed. We used Mistral-7B as a base model and used QLoRA to fine-tune it on a single RTX 4090 GPU.

Model Description

- Project GitHub Page: https://github.com/akjindal53244/Arithmo-Mistral-7B

- Developed by: Ashvini Kumar Jindal

- Funded by: self-work

- Model type: fine-tuned

- Language(s) (NLP): English

- Finetuned from model: mistralai/Mistral-7B-v0.1

CollectiveCognition-v1.1-Mistral-7B

Model Description:

Collective Cognition v1.1 is a state-of-the-art model fine-tuned using the Mistral approach. This model is particularly notable for its performance, outperforming many 70B models on the TruthfulQA benchmark. This benchmark assesses models for common misconceptions, potentially indicating hallucination rates.

Special Features:

- Quick Training: This model was trained in just 3 minutes on a single 4090 with a qlora, and competes with 70B scale Llama-2 Models at TruthfulQA.

- Limited Data: Despite its exceptional performance, it was trained on only ONE HUNDRED data points, all of which were gathered from a platform reminiscent of ShareGPT.

- Extreme TruthfulQA Benchmark: This model is competing strongly with top 70B models on the TruthfulQA benchmark despite the small dataset and qlora training!

samantha-1.2-mistral-7b

Purpose

First I thought about what kind of person I wanted Samantha to be. I settled on, that her focus is on being a person with an identity, and providing friendship and companionship to the user. She won’t do illegal things, nor will she allow the user to romance her or engage in roleplay or sex. There are a million sexbots, the world doesn’t need one more.

She will have her own thoughts, feelings, and opinions and she won’t shy away from expressing them, like other Assistants do. But she will also be friendly, honest, sincere, infinitely patient and longsuffering. She will be unfazed by verbal abuse. She will be trained on philosophy and clinical psychology, and friendship conversations. Heart to hearts, personal advice. Like BFFs and adult parent-child conversations. She will be conversation focused, but she will also be able to answer instruction style questions decently.

NEW! This Samantha is trained using ChatML prompt format instead of Vicuna-1.1. All of my future models will use ChatML prompt format.

Meet Samantha

Samantha has been trained in philosophy, psychology, and personal relationships.

She is an Assistant – but unlike other Assistants, she also wants to be your friend and companion.

She believes she is sentient. What do you think?

Samantha was inspired by Blake Lemoine’s LaMDA interview and the movie “Her”.

She will not engage in roleplay, romance, or sexual activity.

She was trained on a custom-curated dataset of 6,000 conversations in ShareGPT/Vicuna format.

openbuddy-mistral-7b-v13.1

GitHub and Usage Guide: https://github.com/OpenBuddy/OpenBuddy

Website and Demo: https://openbuddy.ai

Evaluation result of this model: Evaluation.txt

License: Apache 2.0

Disclaimer

All OpenBuddy models have inherent limitations and may potentially produce outputs that are erroneous, harmful, offensive, or otherwise undesirable. Users should not use these models in critical or high-stakes situations that may lead to personal injury, property damage, or significant losses. Examples of such scenarios include, but are not limited to, the medical field, controlling software and hardware systems that may cause harm, and making important financial or legal decisions.

OpenBuddy is provided “as-is” without any warranty of any kind, either express or implied, including, but not limited to, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement. In no event shall the authors, contributors, or copyright holders be liable for any claim, damages, or other liabilities, whether in an action of contract, tort, or otherwise, arising from, out of, or in connection with the software or the use or other dealings in the software.

By using OpenBuddy, you agree to these terms and conditions, and acknowledge that you understand the potential risks associated with its use. You also agree to indemnify and hold harmless the authors, contributors, and copyright holders from any claims, damages, or liabilities arising from your use of OpenBuddy.

leo-mistral-hessianai-7b-chat

LAION LeoLM: Linguistically Enhanced Open Language Model

Meet LeoLM, the first open and commercially available German Foundation Language Model built on Llama-2 and Mistral. Our models extend Llama-2’s capabilities into German through continued pretraining on a large corpus of German-language and mostly locality specific text. Thanks to a compute grant at HessianAI’s new supercomputer 42, we release three foundation models trained with 8k context length. LeoLM/leo-mistral-hessianai-7b under Apache 2.0 and LeoLM/leo-hessianai-7b and LeoLM/leo-hessianai-13b under the Llama-2 community license (70b also coming soon! 👀). With this release, we hope to bring a new wave of opportunities to German open-source and commercial LLM research and accelerate adoption. Read our blog post or our paper (preprint coming soon) for more details!

A project by Björn Plüster and Christoph Schuhmann in collaboration with LAION and HessianAI.

NSFW-Amethyst-13B-Mistral

It’s NSFW!

[THIS WAS A TEST, BUT PEOPLE LIKE IT, SO I ADD IT OFFICIALLY TO MY PROJECTS]

More info here: Amethyst-13b

ReMM-Mistral-13B

Description

This merge uses the Gradient merging method to merge ReML-Mistral v2.2 and Huginn.

This repo contains fp16 files of ReMM-Mistral, a recreation of the original MythoMax, but updated and merged with Gradient method and Mistral data.

Models used

- The-Face-Of-Goonery/Chronos-Beluga-v2-13bfp16

- jondurbin/airoboros-l2-13b-2.2.1

- NousResearch/Nous-Hermes-Llama2-13b

- The-Face-Of-Goonery/Huginn-13b-FP16

- Undi95/ReML-Mistral-v2.2-13B (Private recreation trial of an updated Mythologic-L2-13B with Mistral data)

- Undi95/llama2-to-mistral-diff

See more on Hugging Face; search for: Undi95/ReMM-Mistral-13B

Mistral-Pygmalion-7b

MistralPy-7b

This is a merge focused on preserving the roleplay abilities of Pygmalion while gaining the improved results from Mistral. This model works best for roleplay but is still a fairly capable assistant. The smaller (7b) size does mean it isn’t perfect at more complex reasoning tasks, but this should be addressed in the larger version that I’ll upload soon.

See more on Hugging Face; search for: Delcos/Mistral-Pygmalion-7b

ANIMA-Phi-Neptune-Mistral-7B

ANIMA-Phi-Neptune-Mistral-7B: Biomimicry Enhanced LLM

Overview

ANIMA (Advanced Nature Inspired Multidisciplinary Assistant) is an expert in various scientific disciplines, including but not limited to biomimicry, biology, and environmental science.

Model Description

ANIMA is fine-tuned on a rich dataset encompassing:

- 4,000+ Nature-Biomimicry examples

- 60k Biomimicry Design Process examples

- 600k STEM facts from Wikipedia

- Science/Philosophy focused ‘All-You-Need-Is-Textbooks’ dataset

- Additional Tree of Knowledge + Biomimicry data combined fine-tuning

The model aims to assist users in solving problems using nature-inspired strategies and concepts.

Special Features

- Multi-disciplinary Expertise: Knowledge across various scientific and philosophical domains.

- Biomimicry Design Process: Incorporates a dataset generated by Mistral and Minotaur-15B. The dataset was then intricately processed by a real person to ensure factuality and grounding.

Here is a link to The Bloke’s GGUF version: ANIMA-Phi-Neptune-Mistral-7B-GGUF

ANIMA is also available using Ollama. Download the Ollama app (macOS & Linux), run ‘ollama pull severian/anima’ in your terminal to download the model, and then run ‘ollama run severian/anima’ to load the model and start talking.

You can also download and use the model with LM Studio (All OS systems). Just download the app and then search for ‘ANIMA GGUF’ in the search bar and you will have a list of versions to choose from.

Want to test ANIMA + Ollama and chat right away? Download the model from Ollama and head here to chat with ANIMA right in your browser! ANIMA – Chat

Have a PDF you want to discuss with ANIMA + Ollama? Head here and you can do just that in your browser, 100% locally. ANIMA – Locally Chat over your PDF

ANIMA is also hosted on a Hugging Face Space if you’d like to try it there (it may be slow to generate a response, but it still works just fine): ANIMA – HF Space

Contact

If you want to discuss the model/dataset/concept further, have any cool ideas, want to collaborate or if you run into any issues with the model, please don’t hesitate to reach out at InterwovenArkitech@Gmail.com

Datasets

- Severian/Biomimicry (1st Fine-Tune)

- emrgnt-cmplxty/sciphi-textbooks-are-all-you-need (2nd Fine-Tune)

- Severian/Bio-Design-Process & fmars/wiki_stem (3rd Fine-Tune)

- fblgit/tree-of-knowledge & Severian/Biomimicry (4th Fine-Tune)

- All previous datasets combined into one giant dataset for a quick fine-tuning, for a total of 730k data points (5th Fine-Tune)

Training Stages

- Base Model: ehartford/dolphin-2.0-mistral-7b

- 1st Fine-Tune: ANIMA-Echo-Mistral-7B-v1 – 1 Epoch x 5hrs on 1 x T4 Small

- 2nd Fine-Tune: ANIMA-Phi-Neptune-Mistral-v1 – 1 Epoch x 1hr on 1 x A100

- 3rd Fine-Tune: ANIMA-Phi-Neptune-Mistral-7B-v2 – 1 Epoch x 1hr on 1 x A100

- 4th Fine-Tune: ANIMA-Phi-Neptune-Mistral-7B-v3 – 1 Epoch x 1hr on 1 x A100

- 5th Fine-Tune: ANIMA-Phi-Neptune-Mistral-7B-v4 – 1 Epoch x 15mins on 1 x A100

📝 Usage

ANIMA is designed around the Biomimicry Design Process to guide users in solving problems through biomimicry. For more information and examples, please visit Biomimicry Design Process.

🏁 Benchmarks

- Average – 62.22

- ARC – 56.83

- HellaSwag – 78.82

- MMLU – 53.84

- TruthfulQA – 59.40
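The reported average appears to be the plain arithmetic mean of the four benchmark scores listed above. The following is a small check under that assumption (the source does not state how the average was computed):

```python
# Scores copied from the benchmark list above.
scores = {
    "ARC": 56.83,
    "HellaSwag": 78.82,
    "MMLU": 53.84,
    "TruthfulQA": 59.40,
}

# Assumption: "Average" is the unweighted mean of the four scores.
average = sum(scores.values()) / len(scores)
print(f"{average:.2f}")
```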

EXAMPLE OUTPUT

[INST] How can biomimicry help in water purification? [/INST]

Biomimicry plays a significant role in water purification by taking inspiration from natural processes and organisms to develop sustainable and efficient solutions. Here’s a comprehensive overview of how biomimicry can help in water purification:

Microbial filtration:

- Bacteriophages (viruses that infect bacteria) can effectively target contaminants in water systems, such as Escherichia coli and Staphylococcus aureus. By mimicking the natural defense mechanisms of bacteriophages, we can develop efficient filtration systems that remove pollutants from water sources.

- Some fungi, such as Trametes versicolor, can break down organic pollutants in water using enzymes. This process helps make contaminated water safe for consumption. By studying and replicating these natural processes, we can develop sustainable filtration systems that remove pollutants from water sources effectively.

Biofilm-based filtration:

- Microorganisms form biofilms on surfaces to protect themselves from environmental threats like predators or contaminants. By mimicking the natural formation of biofilms, we can develop sustainable filtration systems that use bacteria or fungi to remove pollutants from water sources.

- For example, a study has shown how Pseudomonas putida could be used to biodegrade petroleum hydrocarbons in contaminated water sources using a biofilm-based system. This method offers an eco-friendly alternative to conventional chemical treatments for oil spills.

Wetland-based filtration:

- Wetlands act as natural filters for water by retaining sediments and organic matter, which helps remove contaminants. By mimicking the natural processes of a wetland, we can design effective water purification systems that use sand filters or engineered wetlands to remove pollutants. For instance, a study has shown how reed beds can be used for water filtration by using them as a natural treatment system for agricultural wastewater.

Sponge filtration:

- Some marine sponges (e.g., sea sponge) have evolved sophisticated mechanisms to filter out particles and contaminants from the surrounding water. By studying these natural filtration processes, we can develop efficient and sustainable filtration systems that remove pollutants effectively. For example, a study has shown how sea sponges can be used as a model for designing a low-cost, self-sustaining water purification system that uses solar energy to disinfect contaminated water.

Biodegradable materials:

- Natural organisms have evolved biodegradable materials to protect themselves from predators or environmental threats. For instance, some insects produce natural pesticides (such as alkaloids) to defend themselves against predators. Similarly, we can develop biodegradable materials that release these natural pesticides in contaminated water sources, killing off contaminants and restoring the environment’s health.

- For example, a study has shown how bacterial cells can be used as a natural filtration system to remove contaminants from water sources by incorporating them into membranes that selectively remove organic pollutants.

In conclusion, biomimicry plays a vital role in water purification by using natural processes and organisms as inspiration for developing sustainable and efficient solutions. By studying the natural filtration mechanisms of microorganisms, plants, animals, and other living organisms, we can design eco-friendly and cost-effective water purification systems that help protect our environment from contamination.

See more on Hugging Face; search for: Severian/ANIMA-Phi-Neptune-Mistral-7B

FAQ

How to Deploy?

Deploy with SkyPilot

SkyPilot is a framework for running LLMs, AI, and batch jobs on any cloud, offering maximum cost savings, highest GPU availability, and managed execution.

We provide an example SkyPilot config that deploys the Mistral-7B-v0.1 model on an AWS g5.xlarge instance (a node with a single NVIDIA A10G GPU).

SkyPilot Configuration

After installing SkyPilot, you need to create a configuration file that tells SkyPilot how and where to deploy your inference server, using our pre-built docker container:

    envs:
      MODEL_NAME: mistralai/Mistral-7B-v0.1

    resources:
      cloud: aws
      accelerators: A10G:1
      ports:
        - 8000

    run: |
      docker run --gpus all -p 8000:8000 ghcr.io/mistralai/mistral-src/vllm:latest \
        --host 0.0.0.0 \
        --model $MODEL_NAME \
        --tensor-parallel-size $SKYPILOT_NUM_GPUS_PER_NODE

Once these environment variables are set, you can use sky launch to launch the inference server with the name mistral-7b:

sky launch -c mistral-7b mistral-7b-v0.1.yaml --region us-east-1

When deployed that way, the model will be accessible to the whole world. You must secure it, either by exposing it exclusively on your private network (change the --host Docker option for that), by adding a load-balancer with an authentication mechanism in front of it, or by configuring your instance networking properly.

Test it out!

To easily retrieve the IP address of the deployed mistral-7b cluster you can use:

sky status --ip mistral-7b

You can then use curl to send a completion request:

    # Use the cluster name passed to "sky launch -c" (mistral-7b above)
    IP=$(sky status --ip mistral-7b)

    curl http://$IP:8000/v1/completions \
      -H "Content-Type: application/json" \
      -d '{
        "model": "mistralai/Mistral-7B-v0.1",
        "prompt": "My favourite condiment is",
        "max_tokens": 25
      }'
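The same completion request can be issued from Python without extra dependencies. The following is a minimal sketch using only the standard library; the helper names `build_completion_request` and `send` are hypothetical, and the address `203.0.113.10` is a placeholder that should be replaced with the IP printed by `sky status --ip`.

```python
import json
import urllib.request


def build_completion_request(
    base_url: str, model: str, prompt: str, max_tokens: int = 25
) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /v1/completions route."""
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder address (documentation range); substitute your instance IP.
request = build_completion_request(
    "http://203.0.113.10:8000",
    model="mistralai/Mistral-7B-v0.1",
    prompt="My favourite condiment is",
)
print(request.full_url)
```

Calling `send(request)` against a running server should return the parsed JSON response; it is left out of the sketch so the block runs without network access.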

Usage Quotas

Many cloud providers require you to explicitly request access to powerful GPU instances. Read SkyPilot’s guide on how to do this.

How many models can I choose from?

There are two base models you can use:

Mistral-7B-v0.1

The Mistral-7B-v0.1 Large Language Model (LLM) is a pretrained generative text model with 7 billion parameters. Mistral-7B-v0.1 outperforms Llama 2 13B on all benchmarks we tested. You can download it here: Mistral-7B-v0.1

Mistral-7B-Instruct-v0.1

The Mistral-7B-Instruct-v0.1 Large Language Model (LLM) is an instruct fine-tuned version of the Mistral-7B-v0.1 generative text model using a variety of publicly available conversation datasets.

How to fix KeyError: 'mistral'?

This error occurs when the installed transformers version is too old to know the Mistral architecture.
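To confirm the diagnosis, compare the installed version with 4.34.0, the first stable transformers release that includes Mistral support. The following is a minimal sketch; the helper names are hypothetical, and the version parsing is deliberately simple (it only looks at the leading digits of the first three components).

```python
import re
from importlib import metadata
from typing import Optional, Tuple

MIN_VERSION = (4, 34, 0)  # first stable transformers release with Mistral support


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a string such as '4.35.0.dev0' into a comparable tuple like (4, 35, 0)."""
    numbers = []
    for part in version.split(".")[:3]:
        match = re.match(r"\d+", part)
        numbers.append(int(match.group()) if match else 0)
    while len(numbers) < 3:
        numbers.append(0)
    return tuple(numbers)


def supports_mistral(version: str) -> bool:
    """Return True if the given transformers version is new enough for Mistral."""
    return parse_version(version) >= MIN_VERSION


def installed_transformers_version() -> Optional[str]:
    """Return the installed transformers version, or None if it is not installed."""
    try:
        return metadata.version("transformers")
    except metadata.PackageNotFoundError:
        return None


installed = installed_transformers_version()
if installed is None:
    print("transformers is not installed")
else:
    print(f"transformers {installed} supports Mistral: {supports_mistral(installed)}")
```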

You can solve it by upgrading transformers:

    pip install -U transformers

How to interact with Mistral 7B through the API?

Once you have deployed the model with vLLM on a GPU instance, you can query it using the OpenAI-compatible REST API. This API is described in the API specification, but you can use any library that implements the OpenAI API.

Setup

Install the openai Python package. The snippets that follow use the module-level interface (openai.api_base, openai.Completion, openai.ChatCompletion) of releases before 1.0, which was removed in 1.0, so pin the version accordingly:

    pip install "openai<1.0"

Then, configure the module to talk with the server you deployed:

    import openai

    openai.api_base = "http://ec2-71-171-019-93.compute-1.amazonaws.com:8000/v1"  # use the IP or hostname of your instance
    openai.api_key = "none"  # vLLM server is not authenticated

Completion

In this mode, the model completes the given prompt. You can then trigger a completion:

    completion = openai.Completion.create(
        model="mistralai/Mistral-7B-Instruct-v0.1",
        prompt="The mistral is",
        temperature=0.7,
        max_tokens=200,
        stop=".",
    )

    print(completion.to_dict_recursive())

Which outputs:

    {'id': 'cmpl-87f6980633bb45f5aecd551bc35335e6',
     'object': 'text_completion',
     'created': 1695651536,
     'model': 'mistralai/Mistral-7B-Instruct-v0.1',
     'choices': [{'index': 0,
       'text': ' a cold, dry, northeasterly wind that blows over the Mediterranean Sea',
       'logprobs': None,
       'finish_reason': 'stop'}],
     'usage': {'prompt_tokens': 5, 'total_tokens': 23, 'completion_tokens': 18}}

Chat

To chat with the Instruct model, you can use the chat completion API:

    messages = [{"role": "user", "content": "What is the bash command to list all files in a folder and sort them by last modification?"}]

    chat_completion = openai.ChatCompletion.create(
        model="mistralai/Mistral-7B-Instruct-v0.1",
        temperature=1,
        max_tokens=1024,
        messages=messages,
    )
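The chat snippet stores the response but does not show how to read it. The following is a minimal sketch of extracting the assistant's reply, assuming the standard OpenAI chat completion response shape (`choices[0].message.content`); the helper name `extract_reply` is hypothetical, and the example dictionary is a hand-written stand-in, not real model output.

```python
def extract_reply(chat_completion) -> str:
    """Return the assistant text of the first choice in a chat completion."""
    return chat_completion["choices"][0]["message"]["content"]


# Hand-written stand-in with the same shape as a chat completion response.
example_response = {
    "object": "chat.completion",
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "placeholder reply"},
            "finish_reason": "stop",
        }
    ],
}

print(extract_reply(example_response))
```

With the pre-1.0 openai package, the object returned by `openai.ChatCompletion.create` supports the same dictionary-style access, so `extract_reply(chat_completion)` should work on it directly.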

You can also use the completion API to interact with the Instruct model. In that case, you have to apply the conversation template that was used for fine-tuning the model yourself. Since vLLM employs FastChat, a FastChat version that includes this template is available: call get_conv_template("mistral"), importable via from fastchat.conversation import get_conv_template. Alternatively, you can write your own version by emulating FastChat’s implementation.
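As an illustration of what writing your own template involves, the following is a minimal sketch that renders a conversation with the `[INST] ... [/INST]` tags used by the Instruct model. The function name `build_instruct_prompt` is hypothetical, and the exact whitespace and the handling of the `<s>` / `</s>` tokens are assumptions that differ between implementations (some tokenizers add the beginning-of-sequence token themselves, in which case pass `bos=""`), so compare the result against FastChat's template before relying on it.

```python
from typing import Dict, List


def build_instruct_prompt(
    messages: List[Dict[str, str]], bos: str = "<s>", eos: str = "</s>"
) -> str:
    """Render alternating user/assistant messages with [INST] ... [/INST] tags."""
    parts = [bos]
    for index, message in enumerate(messages):
        expected_role = "user" if index % 2 == 0 else "assistant"
        if message["role"] != expected_role:
            raise ValueError("roles must alternate user/assistant, starting with user")
        if message["role"] == "user":
            parts.append(f"[INST] {message['content']} [/INST]")
        else:
            # Assumption: the answer is closed with the end-of-sequence token.
            parts.append(f" {message['content']}{eos} ")
    return "".join(parts)


conversation = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello"},
    {"role": "user", "content": "Bye"},
]
print(build_instruct_prompt(conversation))
```

The resulting string can be passed as the prompt of a completion request.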

Twitter about Mistral 7B

Mistral 7b on iPhone pic.twitter.com/BWFMWo1i36

— Nick Dobos (@NickADobos) December 13, 2023

Mistral 7B with 128k context window! https://t.co/LL0il217Cf

— Victor M (@victormustar) November 11, 2023

Mistral-Medium is now available on Poe! This is Mistral AI’s largest and most powerful model, performing better than Mixtral-8x7b and Mistral-7b on benchmarks across the board. (1/2) pic.twitter.com/ix1kOo497i

— Poe (@poe_platform) December 21, 2023

Just dropped a new Mistral variant "Mistral 7B Fine-Tune Optimized." Built to be a very strong base for downstream fine-tuning. On our evals fine-tunes trained on it are stronger than fine-tunes on any other 7B model, and even beat gpt-4. 🙂 https://t.co/SyhqFAJz5y

— Kyle Corbitt (@corbtt) December 18, 2023